28

Last week @MetaAI publicly released huge LMs, with up to ☄️30B parameters. Great win for Open-Source🎉

These checkpoints are now in 🤗transformers!

But how to use such big checkpoints?

Introducing Accelerate and

⚡️BIG MODEL INFERENCE⚡️

Load & USE the 30B model in colab (!)⬇️
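
A minimal sketch of what this can look like in code, assuming `transformers`, `accelerate` and `torch` are installed. The tweet does not name the checkpoint, so `facebook/opt-30b` in the usage comment is an assumption, and `weight_memory_gb`, `load_big_model` and `generate` are illustrative helper names, not library functions. The arithmetic shows why the weights alone exceed a single Colab GPU; `device_map="auto"` asks Accelerate to spread them over GPU, CPU RAM and disk.

```python
def weight_memory_gb(num_params: float, bytes_per_param: int = 2) -> float:
    """Rough size of the weights alone, in GB (10**9 bytes)."""
    return num_params * bytes_per_param / 1e9


def load_big_model(checkpoint: str, offload_dir: str = "offload"):
    """Load a causal LM, letting Accelerate place layers on GPU, CPU or disk."""
    # Local imports keep the helper above usable without these libraries.
    import torch
    from transformers import AutoModelForCausalLM, AutoTokenizer

    tokenizer = AutoTokenizer.from_pretrained(checkpoint)
    model = AutoModelForCausalLM.from_pretrained(
        checkpoint,
        device_map="auto",           # needs the accelerate package
        torch_dtype=torch.float16,   # 2 bytes per parameter
        offload_folder=offload_dir,  # layers that fit nowhere else go to disk
    )
    return tokenizer, model


def generate(tokenizer, model, prompt: str, max_new_tokens: int = 20) -> str:
    """Run a short generation with the loaded model."""
    inputs = tokenizer(prompt, return_tensors="pt").to(model.device)
    output_ids = model.generate(**inputs, max_new_tokens=max_new_tokens)
    return tokenizer.decode(output_ids[0], skip_special_tokens=True)


# Usage (assumed checkpoint name, downloads tens of GB):
# tokenizer, model = load_big_model("facebook/opt-30b")
# print(generate(tokenizer, model, "Hello, my name is"))
```

For 30B parameters in half precision, `weight_memory_gb(30e9)` comes to 60 GB, so on Colab most layers would likely end up offloaded and generation would be slow but possible.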

29

The Technology Behind BLOOM Training🌸

Discover how @BigscienceW used @MSFTResearch DeepSpeed + @nvidia Megatron-LM technologies to train the World's Largest Open Multilingual Language Model (BLOOM):

huggingface.co/blog/bloom-meg…

30

Last week, @MetaAI introduced NLLB-200: a massive translation model supporting 200 languages.

Models are now available through the Hugging Face Hub, using 🤗Transformers' main branch.

Models on the Hub: huggingface.co/facebook/nllb-…

Learn about NLLB-200: ai.facebook.com/research/no-la…
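
A hedged sketch of translating with an NLLB checkpoint through the 🤗 Transformers `pipeline` API. The checkpoint name in the usage comment (`facebook/nllb-200-distilled-600M`) is an assumption, since the Hub link above is truncated; `LANG_CODES`, `lang_code` and `build_translator` are illustrative names. NLLB identifies languages with FLORES-200 style codes, of which only three are listed here.

```python
# A tiny subset of the FLORES-200 style codes used by NLLB checkpoints.
LANG_CODES = {
    "english": "eng_Latn",
    "french": "fra_Latn",
    "japanese": "jpn_Jpan",
}


def lang_code(name: str) -> str:
    """Map a plain language name to its code (KeyError if not listed)."""
    return LANG_CODES[name.strip().lower()]


def build_translator(checkpoint: str, source: str, target: str):
    """Create a translation pipeline for one language pair."""
    # Local import so the lookup helper works without transformers installed.
    from transformers import pipeline

    return pipeline(
        "translation",
        model=checkpoint,
        src_lang=lang_code(source),
        tgt_lang=lang_code(target),
    )


# Usage (assumed checkpoint name, downloads the model):
# translator = build_translator(
#     "facebook/nllb-200-distilled-600M", "English", "French"
# )
# print(translator("Open-source machine learning.")[0]["translation_text"])
```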

31

🧨Diffusion models have been powering impressive ML apps such as DALL-E and Imagen.

Introducing 🤗 diffusers: a modular toolbox for diffusion techniques, with a focus on:

🚄Inference pipelines

⏰Schedulers

🏭Models

📃Training examples

github.com/huggingface/di…

32

🖌️ Stable Diffusion meets 🧨Diffusers!

Releasing diffusers==0.2.2 with full support of @StabilityAI's Stable Diffusion & schedulers 🔥

Google colab:

👉 colab.research.google.com/github/hugging…

Code snippet 👇
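
The original snippet is not reproduced here, so what follows is a sketch under assumptions rather than the code from the release. It assumes the `CompVis/stable-diffusion-v1-4` checkpoint (which may require accepting its license and logging in to the Hub) and is written so it tolerates both return styles: newer diffusers releases expose `.images`, while the dict-style `"sample"` key for early releases is an assumption about 0.2.x. `first_image` and `text_to_image` are illustrative names.

```python
def first_image(output):
    """Return the first generated image from a pipeline call result."""
    if hasattr(output, "images"):
        return output.images[0]   # attribute-style output of newer releases
    return output["sample"][0]    # dict-style output (assumed for 0.2.x)


def text_to_image(prompt: str,
                  checkpoint: str = "CompVis/stable-diffusion-v1-4",
                  device: str = "cpu"):
    """Generate one image for a text prompt with Stable Diffusion."""
    # Local import so first_image can be used without diffusers installed.
    from diffusers import StableDiffusionPipeline

    pipe = StableDiffusionPipeline.from_pretrained(checkpoint)
    pipe = pipe.to(device)  # pass "cuda" when a GPU is available
    return first_image(pipe(prompt))


# Usage (downloads several GB of weights; slow on CPU):
# image = text_to_image("a photograph of an astronaut riding a horse")
# image.save("astronaut.png")
```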

34

Happy news from Japan! Japanese Stable Diffusion, trained by rinna on Japanese, now has a demo on Hugging Face Spaces! huggingface.co/spaces/rinna/j…

35

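An announcement from Hugging Face to Japan!

We have started translating the Hugging Face course into Japanese. Thanks to the Student Ambassadors at Tohoku University, the translation of Chapter 1 is complete.

We will keep translating steadily.

Please read the course, learn about Hugging Face Transformers, and give it a try!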

36

Today we are excited to announce a new partnership with @awscloud! 🔥

Together, we will accelerate the availability of open-source machine learning 🤝

Read the post 👉 huggingface.co/blog/aws-partn…

37

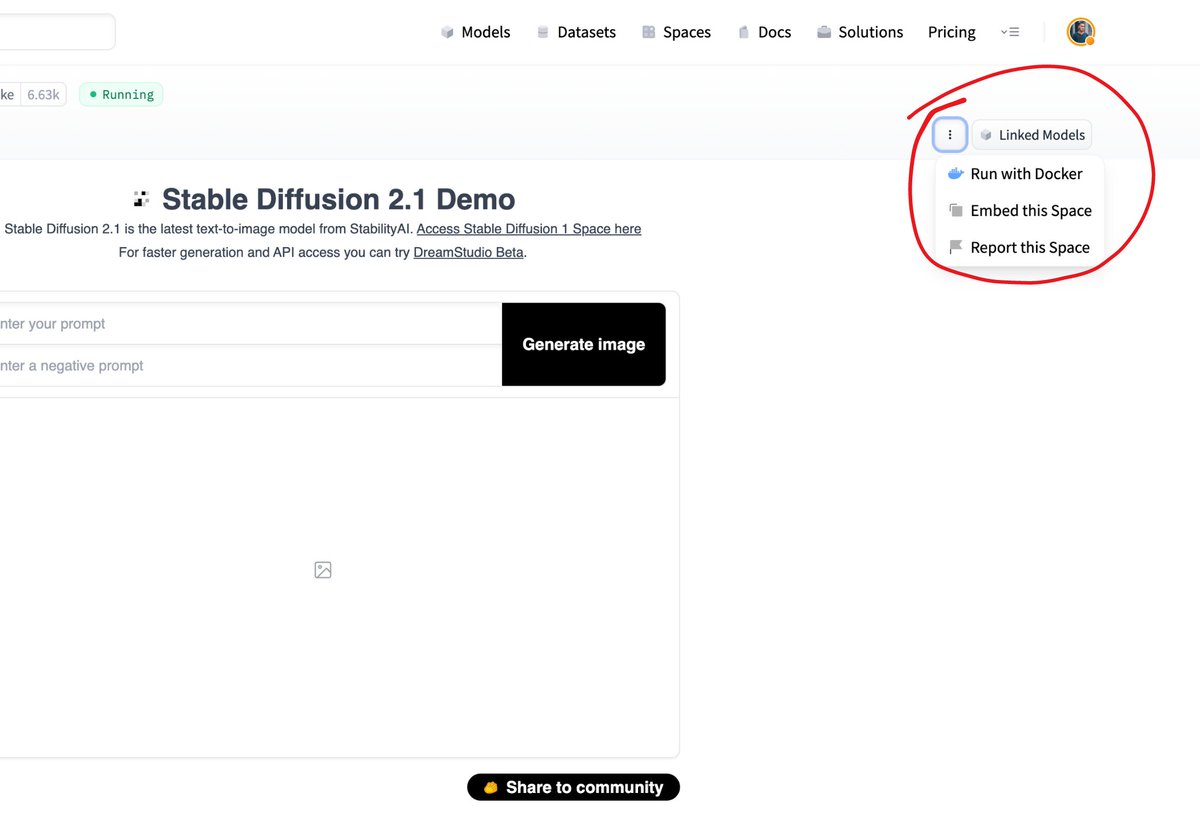

THIS IS BIG! 👀

It's now possible to take any of the >30,000 ML apps from Spaces and run them locally (or on your own infrastructure) with the new "Run with @Docker" feature. 🔥🐳

See an app you like? Run it yourself in just 2 clicks🤯

38

SAM, the groundbreaking segmentation model from @Meta, is now available in 🤗 Transformers!

What does this mean?

1. One line of code to load it, one line to run it

2. Efficient batching support to generate multiple masks

3. pipeline support for easier usage

More details: 🧵
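
The three points above can be sketched roughly as follows, assuming `transformers` and `torch` are installed and using `facebook/sam-vit-base` as an assumed checkpoint name (the tweet does not name one). `as_input_points`, `segment_at_points` and `segment_everything` are illustrative helpers, not part of the library.

```python
def as_input_points(points):
    """Nest (x, y) prompts for a single image as [image][point][x, y]."""
    return [[[float(x), float(y)] for x, y in points]]


def segment_at_points(image, points, checkpoint="facebook/sam-vit-base"):
    """Predict masks for one PIL image from point prompts."""
    import torch
    from transformers import SamModel, SamProcessor

    model = SamModel.from_pretrained(checkpoint)          # load
    processor = SamProcessor.from_pretrained(checkpoint)
    inputs = processor(
        image, input_points=as_input_points(points), return_tensors="pt"
    )
    with torch.no_grad():
        outputs = model(**inputs)                         # run
    # Resize the low-resolution predictions back to the original image size.
    return processor.image_processor.post_process_masks(
        outputs.pred_masks.cpu(),
        inputs["original_sizes"].cpu(),
        inputs["reshaped_input_sizes"].cpu(),
    )


def segment_everything(image, checkpoint="facebook/sam-vit-base",
                       points_per_batch=64):
    """Generate masks for the whole image via the mask-generation pipeline."""
    from transformers import pipeline

    generator = pipeline("mask-generation", model=checkpoint)
    # points_per_batch controls how many point prompts are batched together.
    return generator(image, points_per_batch=points_per_batch)["masks"]


# Usage (downloads the checkpoint):
# from PIL import Image
# image = Image.open("photo.jpg").convert("RGB")
# masks = segment_at_points(image, [(450, 600)])
```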