2

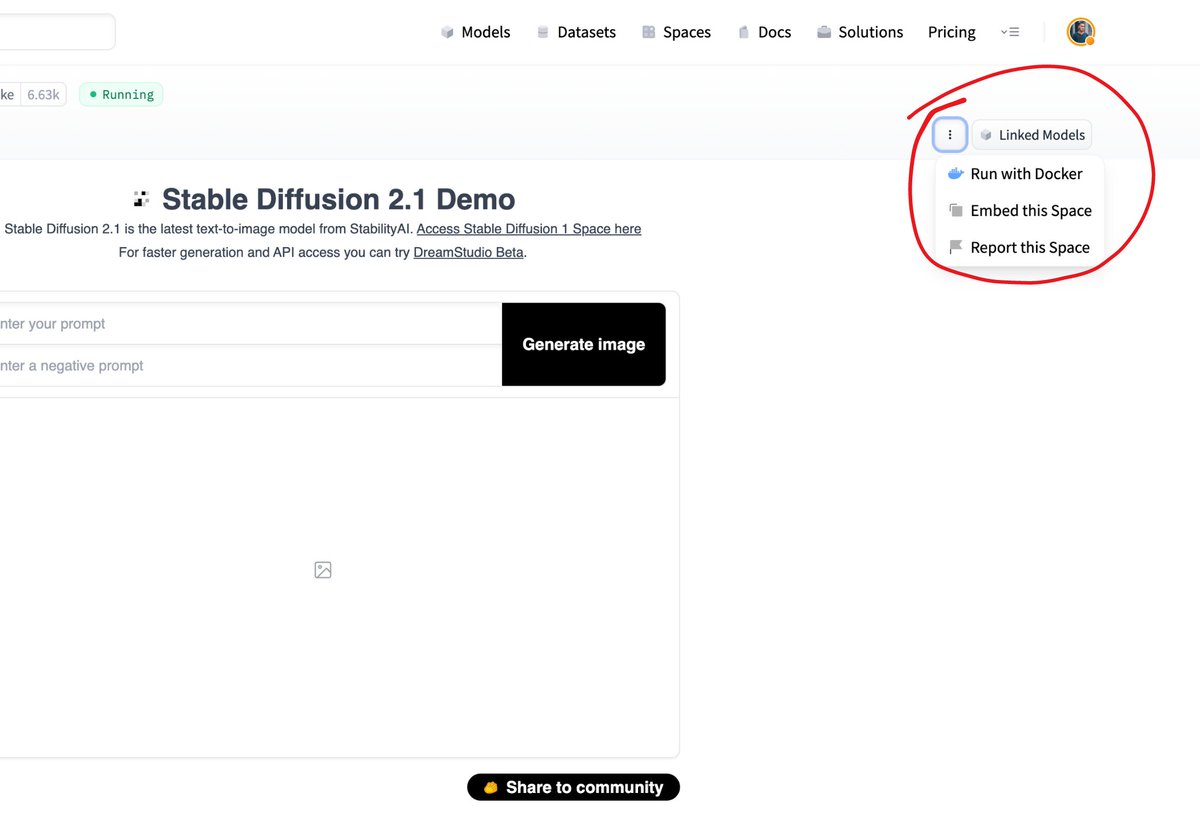

THIS IS BIG! 👀

It's now possible to take any of the >30,000 ML apps from Spaces and run them locally (or on your own infrastructure) with the new "Run with @Docker" feature. 🔥🐳

See an app you like? Run it yourself in just 2 clicks🤯
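
For a rough idea of what "Run with Docker" boils down to, here is a minimal Python sketch that only assembles a `docker run` command for a Space. The registry host `registry.hf.space`, the `owner-space` image naming, port 7860, and the `python app.py` entrypoint are assumptions, and the Space name is hypothetical. The exact command to use is the one shown on the Space itself.

```python
import shlex


def docker_run_command(owner: str, space: str, port: int = 7860) -> list[str]:
    """Build (but do not execute) a docker run command for a Space image."""
    # Assumed image naming scheme: registry.hf.space/<owner>-<space>:latest
    image = f"registry.hf.space/{owner}-{space}:latest"
    return [
        "docker", "run", "-it",
        "-p", f"{port}:{port}",  # expose the app's port on localhost
        image,
        "python", "app.py",      # assumed entrypoint of the app
    ]


# Hypothetical Space, used for illustration only.
cmd = docker_run_command("some-user", "some-space")
print(shlex.join(cmd))
```

Building the command as a list keeps it safe to pass to `subprocess.run` later without shell quoting issues.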

3

Today we are excited to announce a new partnership with @awscloud! 🔥

Together, we will accelerate the availability of open-source machine learning 🤝

Read the post 👉 huggingface.co/blog/aws-partn…

4

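An announcement from Hugging Face to Japan!

We have started translating the Hugging Face Course into Japanese. Thanks to the Student Ambassadors at Tohoku University, the translation of Chapter 1 is complete.

We will keep translating steadily, bit by bit.

Please read the course to learn about Hugging Face Transformers, and give it a try!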

5

Great news from Japan! Japanese Stable Diffusion, trained by rinna on Japanese, now has a demo on Hugging Face Spaces! huggingface.co/spaces/rinna/j…

7

🖌️ Stable Diffusion meets 🧨Diffusers!

Releasing diffusers==0.2.2 with full support of @StabilityAI's Stable Diffusion & schedulers 🔥

Google colab:

👉 colab.research.google.com/github/hugging…

Code snippet 👇

8

🧨Diffusion models have been powering impressive ML apps, enabling DALL-E or Imagen

Introducing 🤗 diffusers: a modular toolbox for diffusion techniques, with a focus on:

🚄Inference pipelines

⏰Schedulers

🏭Models

📃Training examples

github.com/huggingface/di…
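
To make the "Schedulers" item a bit more concrete, here is a minimal pure-Python sketch of the kind of noise schedule a scheduler manages. It is not the diffusers API: the function names are hypothetical, and the linear schedule with 1000 steps and betas from 1e-4 to 0.02 is assumed from the DDPM setup.

```python
import math


def linear_betas(num_steps: int, beta_start: float = 1e-4, beta_end: float = 0.02) -> list[float]:
    """Linearly spaced noise variances, one per diffusion step."""
    step = (beta_end - beta_start) / (num_steps - 1)
    return [beta_start + i * step for i in range(num_steps)]


def alphas_cumprod(betas: list[float]) -> list[float]:
    """Running product of (1 - beta): how much signal survives up to step t."""
    out, running = [], 1.0
    for beta in betas:
        running *= 1.0 - beta
        out.append(running)
    return out


def add_noise(x0: float, noise: float, alpha_bar: float) -> float:
    """Forward process: x_t = sqrt(alpha_bar) * x0 + sqrt(1 - alpha_bar) * noise."""
    return math.sqrt(alpha_bar) * x0 + math.sqrt(1.0 - alpha_bar) * noise


betas = linear_betas(1000)
alpha_bars = alphas_cumprod(betas)
```

Early steps keep almost all of the signal, while the last step leaves almost none, which is what lets sampling start from pure noise.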

9

Last week, @MetaAI introduced NLLB-200: a massive translation model supporting 200 languages.

Models are now available through the Hugging Face Hub, using 🤗Transformers' main branch.

Models on the Hub: huggingface.co/facebook/nllb-…

Learn about NLLB-200: ai.facebook.com/research/no-la…

10

The Technology Behind BLOOM Training🌸

Discover how @BigscienceW used @MSFTResearch DeepSpeed + @nvidia Megatron-LM technologies to train the World's Largest Open Multilingual Language Model (BLOOM):

huggingface.co/blog/bloom-meg…

11

Last week @MetaAI publicly released huge LMs, with up to ☄️30B parameters. Great win for Open-Source🎉

These checkpoints are now in 🤗transformers!

But how to use such big checkpoints?

Introducing Accelerate and

⚡️BIG MODEL INFERENCE⚡️

Load & USE the 30B model in colab (!)⬇️
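
How can a checkpoint bigger than one GPU be used at all? One idea is to spread layers over the GPU, CPU RAM, and disk. The sketch below is a simplified pure-Python illustration of that placement idea, not Accelerate's actual algorithm or API. The function name, layer names, sizes, and budgets are all hypothetical.

```python
def assign_layers(layer_sizes: dict[str, float], budgets: dict[str, float]) -> dict[str, str]:
    """Place layers, in order, on the first device that still has room.

    Sizes and budgets share one unit (GB here). Devices are tried in the
    order given, so list the fastest first. Once a device is full the
    sketch moves on and never returns to it, which keeps consecutive
    layers together. Layers that fit nowhere are assigned to "disk".
    """
    devices = list(budgets)
    remaining = dict(budgets)
    current = 0
    placement = {}
    for name, size in layer_sizes.items():
        # Skip ahead until a device with enough free memory is found.
        while current < len(devices) and remaining[devices[current]] < size:
            current += 1
        if current < len(devices):
            device = devices[current]
            remaining[device] -= size
            placement[name] = device
        else:
            placement[name] = "disk"
    return placement


# Hypothetical model: 8 blocks of 2 GB each, a 7 GB GPU and 6 GB of CPU RAM.
layers = {f"block_{i}": 2.0 for i in range(8)}
placement = assign_layers(layers, {"gpu:0": 7.0, "cpu": 6.0})
```

In this toy setup the first three blocks land on the GPU, the next three in CPU RAM, and the last two on disk.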

13

💫 Perceiver IO by @DeepMind is now available in 🤗 Transformers!

A general purpose deep learning model that works on any modality and combinations thereof

📜text

🖼️ images

🎥 video

🔊 audio

☁️ point clouds

...

Read more in our blog post: huggingface.co/blog/perceiver

14

TODAY'S A BIG DAY

Spaces are now publicly available

Build, host, and share your ML apps on @huggingface in just a few minutes.

There's no limit to what you can build. Be creative, and share what you make with the community.

🙏 @streamlit and @Gradio

hf.co/spaces/launch

15

EleutherAI's GPT-J is now in 🤗 Transformers: a 6-billion-parameter autoregressive model with crazy generative capabilities!

It shows impressive results in:

- 🧮Arithmetic

- ⌨️Code writing

- 👀NLU

- 📜Paper writing

- ...

Play with it to see how powerful it is:

huggingface.co/EleutherAI/gpt…

16

Document parsing meets 🤗 Transformers!

📄#LayoutLMv2 and #LayoutXLM by @MSFTResearch are now available! 🔥

They're capable of parsing document images (like PDFs) by incorporating text, layout, and visual information, as in the @Gradio demo below ⬇️

huggingface.co/spaces/nielsr/…

17

🚨It's time for another community event on July 7th-July 14th🚨

We've partnered up with @GoogleAI and @googlecloud to teach you how to use JAX/Flax for NLP & CV🚀

You define your project - we give you guidance, scripts & free TPU VMs 🤗

To participate:

discuss.huggingface.co/t/open-to-the-…

18

🤗Transformers v4.7.0 was just released with 🖼️DETR by @FacebookAI!

DETR is an Object Detection model that can take models from timm by @wightmanr as a backbone.

Contributed by @NielsRogge, try it out: colab.research.google.com/github/NielsRo…

v4.7.0 launches with support for PyTorch v1.9.0!

19

The first part of the Hugging Face Course is finally out!

Come learn how the 🤗 Ecosystem works 🥳: Transformers, Tokenizers, Datasets, Accelerate, the Model Hub!

Share with your friends who want to learn NLP, it's free!

Come join us at hf.co/course

20

We've heard your requests! Over the past few months ... we've been working on a Hugging Face Course!

The release is imminent. Sign up for the newsletter to know when it comes out: huggingface.curated.co

Sneak peek: Transfer Learning with @GuggerSylvain: youtube.com/watch?v=BqqfQn…

21

GPT-Neo, the #OpenSource cousin of GPT3, can do practically anything in #NLP from sentiment analysis to writing SQL queries: just tell it what to do, in your own words. 🤯

How does it work? 🧐

Want to try it out? 🎮

👉 huggingface.co/blog/few-shot-…
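
"Tell it what to do, in your own words" usually means a few-shot prompt: an instruction, a handful of solved examples, then the new input. A minimal sketch of building one follows. The `Text:` / `Sentiment:` labels and the `###` separator are assumptions chosen for illustration, not a format the model requires.

```python
def build_few_shot_prompt(instruction: str, examples: list[tuple[str, str]], query: str) -> str:
    """Assemble an instruction, solved examples, and an open-ended query."""
    lines = [instruction, ""]
    for text, label in examples:
        lines += [f"Text: {text}", f"Sentiment: {label}", "###"]
    # Leave the last label empty so the model completes it.
    lines += [f"Text: {query}", "Sentiment:"]
    return "\n".join(lines)


prompt = build_few_shot_prompt(
    "Classify the sentiment of each text as Positive or Negative.",
    [
        ("I loved this movie.", "Positive"),
        ("The service was terrible.", "Negative"),
    ],
    "What a great day!",
)
print(prompt)
```

The resulting string is what would be sent to the model as the text to continue.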

22

🔥JAX meets Transformers🔥

@GoogleAI's JAX/Flax library can now be used as Transformers' backbone ML library.

JAX/Flax makes distributed training on TPU effortless and highly efficient!

👉 Google Colab: colab.research.google.com/github/hugging…

👉 Runtime evaluation:

github.com/huggingface/tr…

23

🤗 Transformers meets VISION 📸🖼️

v4.6.0 is the first CV dedicated release!

- CLIP @OpenAI, Image-Text similarity or Zero-Shot Image classification

- ViT @GoogleAI, and

- DeiT @FacebookAI, SOTA Image Classification

Try ViT/DeiT on the hub (Mobile too!):

huggingface.co/google/vit-bas…
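
Zero-shot image classification with image-text similarity can be sketched in a few lines: embed the image and one caption per class, then turn the scaled cosine similarities into probabilities. The embeddings below are made-up toy vectors, the function names are hypothetical, and the scale of 100 is an assumption. A real setup would get the embeddings from the CLIP model.

```python
import math


def cosine(a: list[float], b: list[float]) -> float:
    """Cosine similarity between two vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def zero_shot_probs(image_emb: list[float], text_embs: dict[str, list[float]], scale: float = 100.0) -> dict[str, float]:
    """Softmax over scaled image-text similarities, one score per caption."""
    logits = {label: scale * cosine(image_emb, emb) for label, emb in text_embs.items()}
    top = max(logits.values())  # subtract the max for numerical stability
    exps = {label: math.exp(value - top) for label, value in logits.items()}
    total = sum(exps.values())
    return {label: value / total for label, value in exps.items()}


# Toy embeddings, for illustration only.
image_emb = [0.9, 0.1, 0.0]
text_embs = {
    "a photo of a cat": [1.0, 0.0, 0.0],
    "a photo of a dog": [0.0, 1.0, 0.0],
}
probs = zero_shot_probs(image_emb, text_embs)
best = max(probs, key=probs.get)
```

No classifier is trained here: adding a class only means adding a caption.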

24

Last week, EleutherAI released two checkpoints for GPT Neo, an *Open Source* replication of OpenAI's GPT-3

These checkpoints, of sizes 1.3B and 2.7B, are now available in 🤗 Transformers!

The generation capabilities are truly🤯, try it now on the Hub: huggingface.co/EleutherAI/gpt…

25

🔥Fine-Tuning @FacebookAI's Wav2Vec2 for Speech Recognition is now possible in Transformers🔥

Not only for English but for 53 Languages🤯

Check out the tutorials:

👉 Train Wav2Vec2 on TIMIT huggingface.co/blog/fine-tune…

👉 Train XLSR-Wav2Vec2 on Common Voice

huggingface.co/blog/fine-tune…
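
Wav2Vec2 fine-tuning for speech recognition relies on CTC, where the model emits one token per audio frame and decoding collapses them into text. A minimal sketch of greedy CTC decoding follows. The `<pad>` blank token and the `|` word separator are assumptions about the tokenizer, and the frame sequence is made up.

```python
from itertools import groupby


def ctc_greedy_decode(frame_tokens: list[str], blank: str = "<pad>", word_sep: str = "|") -> str:
    """Collapse repeated frame tokens, drop blanks, and join into text."""
    # Step 1: merge consecutive duplicates (one sound spans many frames).
    collapsed = [token for token, _ in groupby(frame_tokens)]
    # Step 2: remove blanks, which only mark boundaries between tokens.
    chars = [token for token in collapsed if token != blank]
    return "".join(chars).replace(word_sep, " ").strip()


# Made-up per-frame predictions, for illustration only.
frames = ["<pad>", "H", "H", "<pad>", "I", "|", "|", "Y", "<pad>", "O", "O", "<pad>", "O"]
text = ctc_greedy_decode(frames)
print(text)
```

The blank between the two runs of `O` is what allows a genuinely doubled letter to survive the collapse step.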