1

2

No labeled data? No problem.

The 🤗 Transformers master branch now includes a built-in pipeline for zero-shot text classification, to be included in the next release.

Try it out in the notebook here: colab.research.google.com/drive/1jocViLo…
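A minimal sketch of how the zero-shot pipeline might be called, assuming `transformers` is installed and the default model for the `"zero-shot-classification"` task is acceptable. The helper names `classify_zero_shot` and `top_label` are hypothetical and not part of the library.

```python
def classify_zero_shot(text, candidate_labels):
    """Score `text` against labels the model was never explicitly trained on.

    Assumption: the default checkpoint for this task is downloaded on first use.
    """
    # Imported lazily so the helper below can be used without transformers installed.
    from transformers import pipeline

    classifier = pipeline("zero-shot-classification")
    # The result is a dict with "sequence", "labels", and "scores" keys.
    return classifier(text, candidate_labels)


def top_label(result):
    """Return the (label, score) pair with the highest score (hypothetical helper)."""
    return max(zip(result["labels"], result["scores"]), key=lambda pair: pair[1])
```

Calling `top_label(classify_zero_shot("Who are you voting for?", ["politics", "sports"]))` would return the best-scoring label for that sentence. No labeled training data is involved; only the candidate label names are supplied.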

3

THIS IS BIG! 👀

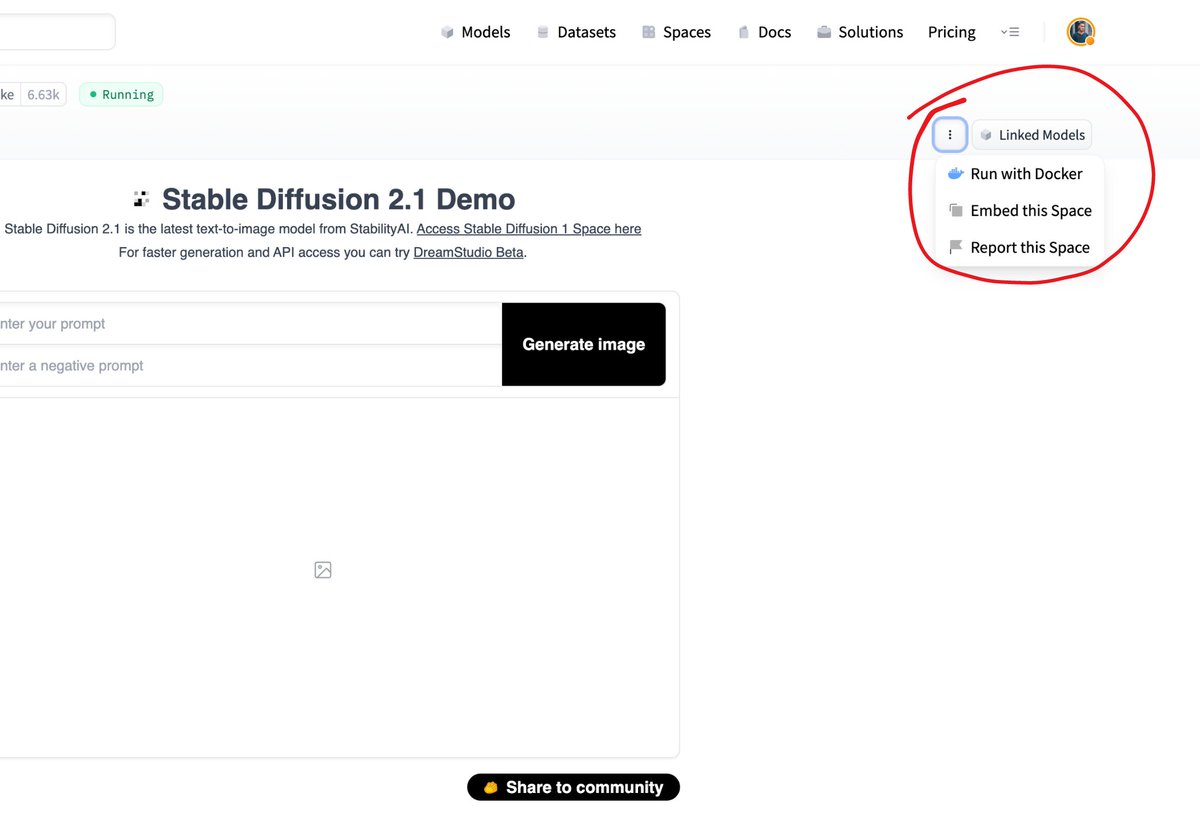

It's now possible to take any of the >30,000 ML apps from Spaces and run them locally (or on your own infrastructure) with the new "Run with @Docker" feature. 🔥🐳

See an app you like? Run it yourself in just 2 clicks🤯

4

Last week, EleutherAI released two checkpoints for GPT Neo, an *Open Source* replication of OpenAI's GPT-3

These checkpoints, of sizes 1.3B and 2.7B, are now available in 🤗 Transformers!

The generation capabilities are truly🤯, try it now on the Hub: huggingface.co/EleutherAI/gpt…

5

🚨Transformers is expanding to Speech!🚨

🤗Transformers v4.3.0 is out and we are excited to welcome @FacebookAI's Wav2Vec2 as the first Automatic Speech Recognition model to our library!

👉Now, you can transcribe your audio files directly on the hub: huggingface.co/facebook/wav2v…

6

Exciting news from Japan! Japanese Stable Diffusion, trained on Japanese by rinna, now has a demo on Hugging Face Spaces! huggingface.co/spaces/rinna/j…

7

🤗 Transformers meets VISION 📸🖼️

v4.6.0 is the first CV dedicated release!

- CLIP @OpenAI, Image-Text similarity or Zero-Shot Image classification

- ViT @GoogleAI, and

- DeiT @FacebookAI, SOTA Image Classification

Try ViT/DeiT on the hub (Mobile too!):

huggingface.co/google/vit-bas…

8

SAM, the groundbreaking segmentation model from @Meta, is now available in 🤗 Transformers!

What does this mean?

1. One line of code to load it, one line to run it

2. Efficient batching support to generate multiple masks

3. pipeline support for easier usage

More details: 🧵

9

Last week @MetaAI publicly released huge LMs, with up to ☄️30B parameters. Great win for Open-Source🎉

These checkpoints are now in 🤗transformers!

But how to use such big checkpoints?

Introducing Accelerate and

⚡️BIG MODEL INFERENCE⚡️

Load & USE the 30B model in colab (!)⬇️
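A rough sketch of why a 30B checkpoint is hard to fit, and how it might be loaded with Accelerate handling placement. The model name `facebook/opt-30b`, the `offload` folder, and both helper names are assumptions for illustration; `device_map="auto"` requires the `accelerate` package to be installed.

```python
def checkpoint_size_gb(num_parameters, bytes_per_parameter=2):
    """Approximate weight size in GB; 2 bytes per parameter corresponds to float16."""
    return num_parameters * bytes_per_parameter / 1e9


def load_big_model(model_name="facebook/opt-30b", offload_folder="offload"):
    """Load a large causal LM, letting Accelerate spread it over GPU, CPU, and disk.

    Assumption: `model_name` and `offload_folder` are placeholders to adapt.
    """
    # Imported lazily: these are heavy dependencies and trigger a large download.
    import torch
    from transformers import AutoModelForCausalLM

    return AutoModelForCausalLM.from_pretrained(
        model_name,
        device_map="auto",          # place layers on available devices automatically
        torch_dtype=torch.float16,  # half precision halves the memory footprint
        offload_folder=offload_folder,  # weights that fit nowhere else go to disk
    )
```

By this estimate, 30 billion parameters in float16 need about 60 GB for the weights alone, which is why layers have to be split across devices or offloaded rather than loaded onto a single GPU.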

10

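An announcement from Hugging Face to Japan!

We have started translating the Hugging Face course into Japanese. Thanks to the Student Ambassadors at Tohoku University, the translation of Chapter 1 is complete.

We will keep translating steadily from here.

Please read the course, learn about Hugging Face Transformers, and try it out!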

12

🧨Diffusion models have been powering impressive ML apps, enabling DALL-E or Imagen

Introducing 🤗 diffusers: a modular toolbox for diffusion techniques, with a focus on:

🚄Inference pipelines

⏰Schedulers

🏭Models

📃Training examples

github.com/huggingface/di…

13

Document parsing meets 🤗 Transformers!

📄#LayoutLMv2 and #LayoutXLM by @MSFTResearch are now available! 🔥

They're capable of parsing document images (like PDFs) by incorporating text, layout, and visual information, as in the @Gradio demo below ⬇️

huggingface.co/spaces/nielsr/…

14

Fine-tuning a *3-billion* parameter model on a single GPU?

Now possible in transformers, thanks to the DeepSpeed/Fairscale integrations!

Thank you @StasBekman for the seamless integration, and thanks to @Microsoft and @FacebookAI teams for their support!

huggingface.co/blog/zero-deep…

15

Want speedy transformers models w/o a GPU?! 🧐

Starting with transformers v3.1.0, your models can now run at the speed of light on commodity CPUs thanks to ONNX Runtime quantization! 🚀 Check out our 2nd blog post with ONNX Runtime on the subject! 🔥

medium.com/microsoftazure…

16

EleutherAI's GPT-J is now in 🤗 Transformers: a 6-billion-parameter autoregressive model with crazy generative capabilities!

It shows impressive results in:

- 🧮Arithmetic

- ⌨️Code writing

- 👀NLU

- 📜Paper writing

- ...

Play with it to see how powerful it is:

huggingface.co/EleutherAI/gpt…

17

Today we are excited to announce a new partnership with @awscloud! 🔥

Together, we will accelerate the availability of open-source machine learning 🤝

Read the post 👉 huggingface.co/blog/aws-partn…

18

Release alert: the 🤗datasets library v1.2 is available now!

With:

- 611 datasets you can download in one line of Python

- 467 languages covered, 99 with at least 10 datasets

- efficient pre-processing to free you from memory constraints

Try it out at:

github.com/huggingface/da…

19

🔥Fine-Tuning @FacebookAI's Wav2Vec2 for Speech Recognition is now possible in Transformers🔥

Not only for English but for 53 Languages🤯

Check out the tutorials:

👉 Train Wav2Vec2 on TIMIT huggingface.co/blog/fine-tune…

👉 Train XLSR-Wav2Vec2 on Common Voice

huggingface.co/blog/fine-tune…

20

GPT-Neo, the #OpenSource cousin of GPT3, can do practically anything in #NLP from sentiment analysis to writing SQL queries: just tell it what to do, in your own words. 🤯

How does it work? 🧐

Want to try it out? 🎮

👉 huggingface.co/blog/few-shot-…
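A minimal sketch of the "tell it what to do" idea: a few-shot prompt is plain text with an instruction, a handful of worked examples, and an unfinished final example for the model to complete. The `Input:`/`Output:` layout, the `###` separator, the helper names, and the model name `EleutherAI/gpt-neo-1.3B` are assumptions for illustration, not a format prescribed by the library.

```python
def build_few_shot_prompt(instruction, examples, query, separator="###"):
    """Assemble an instruction, solved examples, and an open query into one prompt."""
    parts = [instruction]
    for text, label in examples:
        parts.append(f"Input: {text}\nOutput: {label}")
    # The last block is left unfinished so the model generates the answer.
    parts.append(f"Input: {query}\nOutput:")
    return f"\n{separator}\n".join(parts)


def complete(prompt, model_name="EleutherAI/gpt-neo-1.3B", max_new_tokens=10):
    """Let a text-generation pipeline continue the prompt (downloads the model)."""
    # Imported lazily so prompt building works without transformers installed.
    from transformers import pipeline

    generator = pipeline("text-generation", model=model_name)
    return generator(prompt, max_new_tokens=max_new_tokens)[0]["generated_text"]


prompt = build_few_shot_prompt(
    "Classify the sentiment of each sentence as positive or negative.",
    [("I loved this movie.", "positive"), ("The food was awful.", "negative")],
    "What a fantastic day!",
)
```

Passing `prompt` to `complete` would return the prompt followed by the model's continuation. Changing the task only means changing the instruction and examples; no fine-tuning is involved.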

21

The Technology Behind BLOOM Training🌸

Discover how @BigscienceW used @MSFTResearch DeepSpeed + @nvidia Megatron-LM technologies to train the World's Largest Open Multilingual Language Model (BLOOM):

huggingface.co/blog/bloom-meg…

22

The ultimate guide to encoder-decoder models!

Today, we're releasing part one explaining how they work and why they have become indispensable for NLG tasks such as summarization and translation.

> colab.research.google.com/drive/18ZBlS4t…

Subscribe for the full series: huggingface.curated.co

23

Last week, @MetaAI introduced NLLB-200: a massive translation model supporting 200 languages.

Models are now available through the Hugging Face Hub, using 🤗Transformers' main branch.

Models on the Hub: huggingface.co/facebook/nllb-…

Learn about NLLB-200: ai.facebook.com/research/no-la…

24

$40M Series B! 🙏 Thank you open source contributors, pull requesters, issue openers, notebook creators, model architects, tweeting supporters & community members all over the 🌎!

We couldn't do what we do & be where we are - in a field dominated by big tech - without you!

25

We are honored to be awarded the Best Demo Paper for "Transformers: State-of-the-Art Natural Language Processing" at #emnlp2020 😍

Thank you to our wonderful team members and the fantastic community of contributors who make the library possible 🤗🤗🤗

aclweb.org/anthology/2020…