26

$40M series B! 🙏Thank you open source contributors, pull requesters, issue openers, notebook creators, model architects, tweeting supporters & community members all over the 🌎!

We couldn't do what we do & be where we are - in a field dominated by big tech - without you!

27

The new SOTA is in Transformers! DeBERTa-v2 beats the human baseline on SuperGLUE and reaches up to a crazy 91.7% dev accuracy on the MNLI task.

Beats T5 while being 10x smaller!

DeBERTa-v2 contributed by @Pengcheng2020 from @MSFTResearch

Try it directly on the hub: huggingface.co/microsoft/debe…

28

🚨Transformers is expanding to Speech!🚨

🤗Transformers v4.3.0 is out and we are excited to welcome @FacebookAI's Wav2Vec2 as the first Automatic Speech Recognition model to our library!

👉Now, you can transcribe your audio files directly on the hub: huggingface.co/facebook/wav2v…
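Wav2Vec2 is trained with a CTC (connectionist temporal classification) objective, so the model predicts one token per audio frame and those predictions must be collapsed into text. The sketch below illustrates greedy CTC decoding in plain Python: take the argmax per frame, merge repeated tokens, then drop the blank token. In the library this step is handled by the tokenizer/processor; the toy vocabulary, the blank index and the fake logits here are illustrative assumptions, not the real checkpoint's vocabulary.

```python
from itertools import groupby
from typing import List, Sequence

# Hypothetical toy vocabulary: index 0 acts as the CTC blank (Wav2Vec2 reuses
# its padding token for this role) and "|" marks a word boundary.
VOCAB = ["<pad>", "|", "A", "C", "E", "H", "L", "O", "T"]
BLANK_ID = 0
WORD_DELIMITER = "|"


def ctc_greedy_decode(frame_logits: Sequence[Sequence[float]]) -> str:
    """Greedy CTC decoding: argmax per frame, merge repeats, drop blanks."""
    # 1. Pick the most likely token for every audio frame.
    best_ids = [max(range(len(frame)), key=frame.__getitem__) for frame in frame_logits]
    # 2. Collapse consecutive duplicates ("HHEE" -> "HE").
    collapsed = [token_id for token_id, _ in groupby(best_ids)]
    # 3. Remove blanks; a blank between two identical letters keeps both ("L<pad>L" -> "LL").
    chars = [VOCAB[i] for i in collapsed if i != BLANK_ID]
    # 4. Turn word delimiters into spaces.
    return "".join(chars).replace(WORD_DELIMITER, " ").strip()


def one_hot(token: str) -> List[float]:
    """Build a fake logit vector that strongly prefers `token`."""
    return [1.0 if t == token else 0.0 for t in VOCAB]


if __name__ == "__main__":
    frames = [one_hot(t) for t in ["H", "H", "E", "L", "<pad>", "L", "O", "O", "|", "C", "A", "T"]]
    print(ctc_greedy_decode(frames))
```

The blank token is what lets CTC spell double letters: without the blank between the two "L" frames, they would be merged into a single "L".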

29

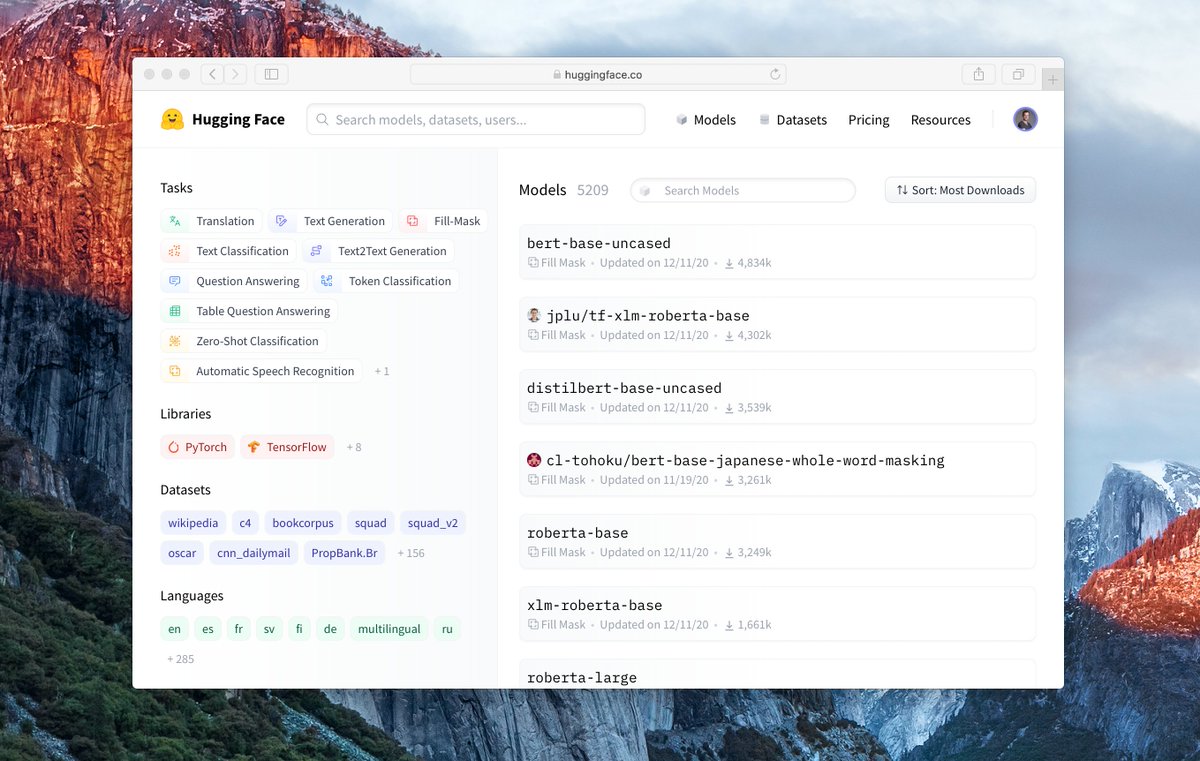

🔥We're launching the new huggingface.co and it's incredible

🚀Play live with 10B+ parameter models, deploy them instantly in production with our hosted API, join the 500 organizations using our hub to host/share models & datasets

And one more thing... 👇

30

Fine-tuning a *3-billion* parameter model on a single GPU?

Now possible in transformers, thanks to the DeepSpeed/FairScale integrations!

Thank you @StasBekman for the seamless integration, and thanks to @Microsoft and @FacebookAI teams for their support!

huggingface.co/blog/zero-deep…
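A back-of-the-envelope calculation shows why a 3B-parameter model needs help to fit on one GPU. Assuming mixed-precision training with Adam and the per-parameter accounting used in the ZeRO paper (2 bytes of fp16 weights, 2 bytes of fp16 gradients, 12 bytes of fp32 optimizer states), the sketch below estimates memory before activations are counted. The helper and its `offload_optimizer` flag are hypothetical, not DeepSpeed or Transformers APIs; real ZeRO-Offload also moves gradients to the CPU, which this simplified sketch keeps on the GPU.

```python
# Bytes per parameter for mixed-precision Adam training (ZeRO-paper accounting).
FP16_WEIGHTS = 2
FP16_GRADS = 2
ADAM_STATES = 12  # fp32 master weights + momentum + variance (4 + 4 + 4)


def training_memory_gb(num_params: float, offload_optimizer: bool = False) -> float:
    """Hypothetical helper: GPU memory (1 GB = 1e9 bytes) for weights, grads and
    optimizer states, ignoring activations and temporary buffers."""
    bytes_per_param = FP16_WEIGHTS + FP16_GRADS
    if not offload_optimizer:
        # Optimizer states stay on the GPU unless they are offloaded to CPU RAM.
        bytes_per_param += ADAM_STATES
    return num_params * bytes_per_param / 1e9


print(training_memory_gb(3e9))                          # all states on the GPU
print(training_memory_gb(3e9, offload_optimizer=True))  # optimizer states on the CPU
```

The optimizer states account for three quarters of the 16 bytes per parameter, which is why moving them off the GPU has such a large effect: roughly 48 GB drops to roughly 12 GB for 3B parameters.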

31

Release alert: the 🤗datasets library v1.2 is available now!

With:

- 611 datasets you can download in one line of Python

- 467 languages covered, 99 with at least 10 datasets

- efficient pre-processing to free you from memory constraints

Try it out at:

github.com/huggingface/da…

32

We are honored to be awarded the Best Demo Paper for "Transformers: State-of-the-Art Natural Language Processing" at #emnlp2020 😍

Thank you to our wonderful team members and the fantastic community of contributors who make the library possible 🤗🤗🤗

aclweb.org/anthology/2020…

33

Our API now includes a brand new pipeline: zero-shot text classification

This feature lets you classify sequences into the specified class names out-of-the-box w/o any additional training in a few lines of code! 🚀

Try it out (and share screenshots 📷): huggingface.co/facebook/bart-…
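Zero-shot classification of this kind is typically built on a model fine-tuned for natural language inference (NLI): each candidate label is turned into a hypothesis such as "This example is {label}." and the model scores whether the input entails it. The sketch below shows that scoring step for the single-label case, normalizing the entailment logits across labels with a softmax. `toy_nli` is a hypothetical keyword-matching stand-in for a real NLI model, and the (contradiction, neutral, entailment) logit order is an assumption that varies between checkpoints.

```python
import math
from typing import Callable, Dict, List, Sequence

# A premise/hypothesis scorer returning NLI logits ordered as
# (contradiction, neutral, entailment); this ordering is an assumption.
NLIScorer = Callable[[str, str], Sequence[float]]
ENTAILMENT = 2


def softmax(xs: Sequence[float]) -> List[float]:
    """Numerically stable softmax."""
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def zero_shot_classify(sequence: str, labels: Sequence[str], nli: NLIScorer,
                       template: str = "This example is {}.") -> Dict[str, float]:
    """Score each label by how strongly `sequence` entails its hypothesis."""
    # Build one hypothesis per candidate label and keep only the entailment logit.
    entail_logits = [nli(sequence, template.format(label))[ENTAILMENT] for label in labels]
    # Single-label setting: normalize across labels so the scores sum to 1.
    return dict(zip(labels, softmax(entail_logits)))


def toy_nli(premise: str, hypothesis: str) -> Sequence[float]:
    """Hypothetical stand-in for an NLI model: entails if the label word occurs in the premise."""
    label_word = hypothesis.rstrip(".").split()[-1].lower()
    entailment = 3.0 if label_word in premise.lower() else 0.0
    return [0.0, 0.0, entailment]


scores = zero_shot_classify("Who will win the football match tonight?",
                            ["football", "politics"], toy_nli)
print(scores)
```

Because no label-specific training is involved, new class names can be passed at call time; when labels are not mutually exclusive, each label can instead be scored independently rather than normalized against the others.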

34

The ultimate guide to encoder-decoder models!

Today, we're releasing part one explaining how they work and why they have become indispensable for NLG tasks such as summarization and translation.

> colab.research.google.com/drive/18ZBlS4t…

Subscribe for the full series: huggingface.curated.co
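At generation time an encoder-decoder model encodes the input once and then runs the decoder autoregressively, conditioning each new token on the encoder output and on the tokens it has produced so far. The sketch below shows this greedy loop in plain Python. `toy_encode` and `toy_decode_step` are hypothetical stand-ins (a real decoder returns a probability distribution over the vocabulary, not a token), and this is not the Transformers `generate()` implementation.

```python
from typing import Callable, List, Sequence

BOS, EOS = "<s>", "</s>"

Encoder = Callable[[Sequence[str]], List[str]]
# (encoder states, decoder prefix) -> next token
DecodeStep = Callable[[List[str], List[str]], str]


def greedy_generate(source: Sequence[str], encode: Encoder, decode_step: DecodeStep,
                    max_len: int = 20) -> List[str]:
    """Encode the input once, then emit one token at a time until EOS or max_len."""
    memory = encode(source)  # the encoder runs a single time
    output = [BOS]
    for _ in range(max_len):
        # The decoder sees the encoder states plus everything generated so far.
        next_token = decode_step(memory, output)
        if next_token == EOS:
            break
        output.append(next_token)
    return output[1:]  # drop BOS


def toy_encode(tokens: Sequence[str]) -> List[str]:
    """Hypothetical encoder: uppercases every token."""
    return [t.upper() for t in tokens]


def toy_decode_step(memory: List[str], prefix: List[str]) -> str:
    """Hypothetical decoder: emits the encoded tokens in reverse order, then EOS."""
    i = len(prefix) - 1  # number of tokens generated so far
    return memory[-1 - i] if i < len(memory) else EOS


print(greedy_generate(["hello", "world"], toy_encode, toy_decode_step))
```

Separating the one-off encoding from the token-by-token decoding is what makes these models a natural fit for tasks like summarization and translation, where the output length differs from the input length.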

35

🔥Transformers' first-ever end-2-end multimodal demo was just released, leveraging LXMERT, the SOTA model for visual Q&A!

Model by @HaoTan5, @mohitban47, with an impressive implementation in Transformers by @avalmendoz (@uncnlp)

Notebook available here: colab.research.google.com/drive/18TyuMfZ… 🤗

36

Want speedy transformers models w/o a GPU?! 🧐

Starting with transformers v3.1.0, your models can now run at the speed of light on commodity CPUs thanks to ONNX Runtime quantization! 🚀 Check out our 2nd blog post with ONNX Runtime on the subject! 🔥

medium.com/microsoftazure…
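Quantization speeds up CPU inference by storing weights as 8-bit integers plus a scale factor, so the heavy matrix multiplications can use integer arithmetic. The sketch below illustrates symmetric per-tensor int8 quantization in plain Python to show the idea; ONNX Runtime's quantizer has its own settings (for example signed vs unsigned 8-bit types and per-channel scales) that this sketch does not reproduce.

```python
from typing import List, Sequence, Tuple


def quantize_int8(values: Sequence[float]) -> Tuple[List[int], float]:
    """Symmetric linear quantization of floats to int8 with one per-tensor scale."""
    max_abs = max(abs(v) for v in values) or 1.0  # avoid a zero scale for all-zero input
    scale = max_abs / 127.0                       # the largest magnitude maps to +/-127
    q = [max(-127, min(127, round(v / scale))) for v in values]
    return q, scale


def dequantize(q: Sequence[int], scale: float) -> List[float]:
    """Map int8 codes back to approximate float values."""
    return [x * scale for x in q]


weights = [0.5, -1.27, 0.003, 1.0]
q, scale = quantize_int8(weights)
print(q, scale)
print(dequantize(q, scale))
```

Each dequantized value differs from the original by at most half a quantization step (`scale / 2`); small weights such as `0.003` can round to zero, which is the accuracy/speed trade-off quantization makes.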

37

38

No labeled data? No problem.

The 🤗 Transformers master branch now includes a built-in pipeline for zero-shot text classification, to be included in the next release.

Try it out in the notebook here: colab.research.google.com/drive/1jocViLo…